A Deep Dive Into User Engagement Through Tricky Averages - Issue 149

Common pitfalls of using averages to measure user engagement

Welcome to the Data Analysis Journal, a weekly newsletter about data science and analytics.

If I told you averages are tricky, you probably already know it. We all know how outliers, variance, and distributions can tell a misleading story. That said, as a proxy for 'typical', averages will not disappear from reporting, whether in finance, marketing, or product.
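
To make the outlier problem concrete, here is a minimal Python sketch with made-up weekly session counts (hypothetical numbers, not data from any real product). A single power user is enough to pull the mean far above what a typical user does, while the median barely moves.

```python
from statistics import mean, median

# Hypothetical weekly session counts for ten users (illustrative numbers only).
# Nine users are casual; the last one is a single power user.
sessions = [1, 1, 2, 2, 2, 3, 3, 3, 4, 79]

avg = mean(sessions)    # dragged up by the one outlier
mid = median(sessions)  # much closer to what a typical user does

print(f"mean sessions per user:   {avg:.1f}")
print(f"median sessions per user: {mid:.1f}")
print(f"users at or above the mean: {sum(s >= avg for s in sessions)} of {len(sessions)}")
```

Here the mean is 10 sessions and the median is 2.5, and only one of the ten users actually reaches the "average".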

I am here today to remind you of the tricky (very tricky) nature of averages. I’ve been burned by it many times before, and it just happened to me again (you would think I'd have learned from past mistakes by now). I will also walk through three different approaches to reporting the same average metric for user engagement, each of which tells a completely different story.

As always, I’m here to remind you how fascinating analytics is, and how we have the power to construct almost any story and tailor any stats VCs might want to see. We should use this skill wisely.

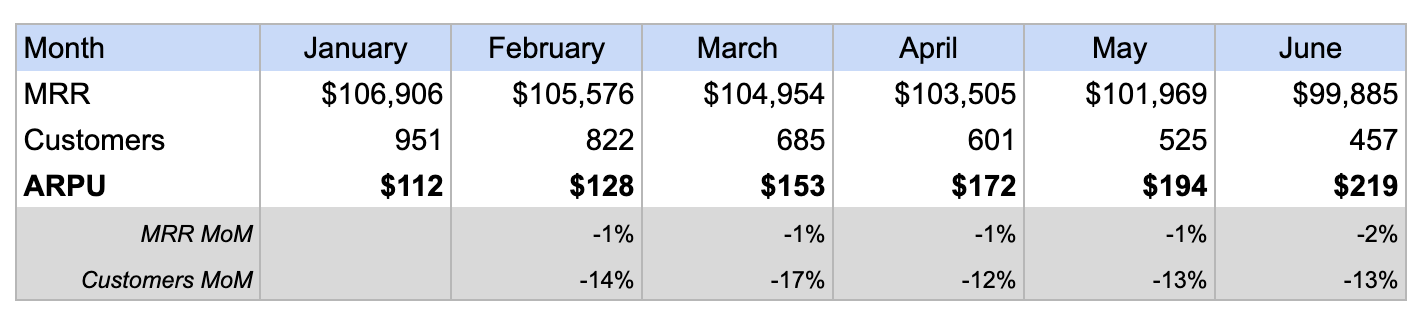

Averages are my pet peeve. I don’t believe it’s safe to read too much into KPIs that are calculated as averages. For example, I previously shared my concern about ARPC (or ARPU):

Average Revenue Per Customer (ARPC) can rise while you are losing customers. Just think about this for a moment. And it’s not only ARPC: when the total audience shrinks and the users who leave are the least engaged or lowest-paying ones, every metric calculated as an “average per user” will go up. Thanks, math!
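
A minimal sketch of why this happens, using hypothetical revenue figures and a hypothetical `arpc` helper: ARPC is total revenue divided by the number of paying customers, so if the customers who churn are the ones paying the least, the denominator shrinks faster than the numerator.

```python
# Hypothetical monthly revenue per customer (illustrative numbers only).
month_1 = {"a": 10, "b": 10, "c": 10, "d": 20, "e": 50}
# Next month the three lowest-paying customers churn; "d" and "e" remain.
month_2 = {"d": 20, "e": 50}


def arpc(revenue_by_customer: dict[str, float]) -> float:
    """Average revenue per customer: total revenue / number of paying customers."""
    return sum(revenue_by_customer.values()) / len(revenue_by_customer)


for label, month in [("month 1", month_1), ("month 2", month_2)]:
    print(f"{label}: customers={len(month)}, "
          f"revenue={sum(month.values())}, ARPC={arpc(month):.1f}")
```

Total revenue drops from 100 to 70 and the customer base shrinks from five to two, yet ARPC climbs from 20.0 to 35.0. Read in isolation, the ARPC trend looks like growth.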

Below I’ll demonstrate an example of how averages can mislead, trick, or create a completely different story.

A case study on user engagement analysis
