Data Analysis Journal

Embracing the New Era of Accelerated Testing - Issue 150

How to adapt your data science and analytics teams to support the ever-changing framework of A/B tests successfully.

Hello, and welcome to my Data Analysis Journal, where I write about data science and product analytics.

This month paid subscribers learned about:

Decoding Regression Scores - Linear Regression Part 2: How to read regression plots and interpret the equation that drives analysis and predictions.

How To Prove Causation - If correlation doesn’t imply causation, then what does? Examples of causation analysis, how to use regression for decision-making, and how to recognize when the regression pattern you see is correct and trusted.

A Deep Dive Into User Engagement Through Tricky Averages - A reminder of how averages can be misleading. Math and steps to report “average per user per day” KPI to measure the depth of user engagement.

A few weeks ago, I went to the first meetup of the reopened DS&ML series organized by WiMLDS and Lyft. One of the talks there, Demonstrating Leadership through A/B Testing, stuck with me and got me thinking. And thinking.

The talk was great. It was essentially a textbook-like A/B test methodology laid out in a concise and structured way, illustrating the foundational aspects with a solid case study from the Pandora product analytics team. And yet, when I tried to map that A/B testing framework onto our current development workflow, it was clear it wouldn’t set my team up for success.

The modern push for accelerated "optimization" makes me question its methods and wonder whether the current school of experimentation is actually set up for it. It seems to me that the way of doing hypothesis testing we learned back in statistics class doesn’t serve us well anymore. To succeed, we have to make questionable compromises, break some boundaries, and bend over backward to make it all work.

So today I’ll talk about the dissonance between the tempting promise to “optimize your app in no time and effort using our tool to grow 10x faster” and being data-driven in the true sense: making sound decisions based on facts and proven insights that you can trust and validate.

And the biggest question on my plate is this: how do you set the data science and analytics team up for success, so that it empowers this new accelerated “optimization” culture while keeping the confidence and accuracy of the insights you share?

As I mentioned before, A/B testing is often a source of tension between analysts and product owners. After reading success stories from Meta (How We Shipped Reactions), LinkedIn (Detecting interference: An A/B test of A/B tests), or Airbnb (Experiments at Airbnb), many product leaders are inspired to follow the trend of constantly iterating on user flows, copy, CTA positioning, layouts, etc. Then analysts come in and question the test objective, setup, baseline metrics, and experimentation toolkit. Quite often they have to push back on the test launch, delay the test readout, or even disregard the results. They might appear to hinder progress when they’re really just doing their job.

Your ability to test depends directly on how well your analytics is set up. This comes down to (A) testing instrumentation, (B) analytics maturity, and (C) test procedures and protocols.

Now, let’s imagine you have:

The instrumentation of tomorrow that “streamlines your feature flagging” and allows you to “build and deploy in 10 minutes flat” and “easily control multiple flags at once” (Kameleoon, Superwall, LaunchDarkly, DevCycle to name a few).

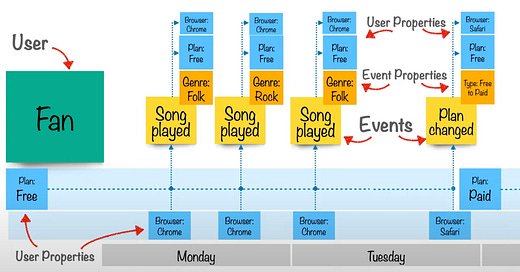

On top of that, let’s pretend you work at some mystical, magical workplace with a solid analytical foundation in place, maintained by dedicated full-time data governance champions. You have your events, definitions, data attributes, and schemas in order (I don’t believe in tooling for this).

Then what? The success of experimentation will come down to the same old standard:

Test procedure and protocols.

But here’s the thing. The current “academic” workflow we have been taught to follow (and which I stand by) will, ironically, fail you once you have solid instrumentation and dream data and analytics governance. Here’s why.

A/B tests become a never-ending lifecycle of quick optimizations

Testing today, especially on mobile, evolves into a never-ending series of short A/B tests. There is no beginning and no end to a product feature iteration. It's an eternally evolving spiral that we may never escape from. And this is a new thing, aggressively boosted by modern app development tooling.

From Superwall:

“We actually allow you to iterate an experiment in flight. Let's say you have a (A: 50) (B: 50) setup and B starts winning, you can maintain the existing users in their groups and either (1) Start assigning more users into B to minimize the opportunity cost of the test, while slowly marching to stat sig or (2) Introduce C, while still assigning users to (A:25) (B:25) (C:50) to quickly iterate. It's not a statistically perfect method but allows for directionally correct iteration w/o having to wait for the experiment to complete. It's something some of our most successful teams do.”
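The quote itself admits that re-weighting in flight is "not a statistically perfect method." One concrete reason, sketched below with made-up counts: if traffic shifts toward B at the same time as the baseline conversion rate drifts (a new traffic source, seasonality), a naive pooled comparison blends the allocation change with the drift, and B can look worse overall even though it wins in every phase. Comparing arms within each allocation phase, then averaging the phase-level differences, avoids that. The phase split, the counts, and the size-based weighting are illustrative assumptions, not Superwall's method.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    users: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.users


# Hypothetical counts: phase 1 runs 50/50, phase 2 shifts traffic to 25/75
# while the baseline conversion rate drops for everyone.
phases = [
    {"A": Arm(1000, 200), "B": Arm(1000, 220)},  # A 20%, B 22%
    {"A": Arm(500, 25), "B": Arm(1500, 90)},     # A 5%,  B 6%
]


def pooled_lift(phases) -> float:
    """Naive read: sum users and conversions across phases, then compare B to A."""
    a = Arm(sum(p["A"].users for p in phases), sum(p["A"].conversions for p in phases))
    b = Arm(sum(p["B"].users for p in phases), sum(p["B"].conversions for p in phases))
    return b.rate - a.rate


def stratified_lift(phases) -> float:
    """Compare arms within each allocation phase, weighting phases by their size."""
    total = sum(p["A"].users + p["B"].users for p in phases)
    return sum(
        (p["B"].rate - p["A"].rate) * (p["A"].users + p["B"].users) / total
        for p in phases
    )


print(f"pooled lift:     {pooled_lift(phases):+.3f}")
print(f"stratified lift: {stratified_lift(phases):+.3f}")
```

With these counts, the pooled comparison puts B about 2.6 points behind A, while the phase-stratified estimate puts B about 1.5 points ahead. Weighting phases by size is only one reasonable choice; the point is not to treat users assigned under different allocations as one sample.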

Our previous academic approach to handling and evaluating test data simply doesn't work anymore, and most analytics teams are not equipped or skilled for this. We were taught to treat every A/B test as a project: create a ticket for it, assign an experiment id, estimate the timeline to reach significance, document a case study for each test, have the analysis ready, run checks, do 3-month follow-ups, you know the story.
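For reference, the "estimate the timeline to reach significance" step usually looks like the standard two-proportion sample size calculation below. This is a minimal sketch assuming a conversion-rate metric, a two-sided test, and a steady daily flow of eligible users; the function names and parameters are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control: float, mde_abs: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed per arm to detect an absolute lift of mde_abs (two-sided z-test)."""
    p_variant = p_control + mde_abs
    p_bar = (p_control + p_variant) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / mde_abs ** 2)


def days_to_significance(p_control: float, mde_abs: float, daily_eligible_users: int,
                         traffic_share: float = 1.0, arms: int = 2, **kwargs) -> int:
    """Days of traffic needed, given the share of eligible users enrolled in the test."""
    n = sample_size_per_arm(p_control, mde_abs, **kwargs)
    users_per_day = daily_eligible_users * traffic_share
    return math.ceil(n * arms / users_per_day)


print(sample_size_per_arm(0.05, 0.01))
print(days_to_significance(0.05, 0.01, 2000, traffic_share=0.1))
```

For example, a 5% baseline, a 1-point absolute lift, 2,000 eligible users a day, and a 10% rollout need roughly 8,200 users per arm and about 82 days, which is exactly the kind of timeline a slow, small-percentage rollout makes unrealistic.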

This "old-school" approach holds you back, blocks teams, and is neither scalable nor efficient.

I am astonished by how much today's modern tooling accelerates optimization. The experimentation enabled by Superwall, Qonversion, Split, and others sets the bar very high. Just think about it: a given user is put into 5-10 different tests or "experiences" simultaneously, which are connected, overlapping, or, worst of all, dependent on each other. For example, you can concurrently test the onboarding flow, paywall conversions (which are connected to the onboarding flow), and access to premium features (which depends on paywall conversion) for the same user. It's growth-fascinating and statistically terrifying at the same time.

Before, it was "an error" not to exclude users from your test analysis who were enrolled in several tests at the same time. Now, you are often forced to keep them to reach significance (mind you, most tests have slow rollouts and might be running at a small % of traffic on one mobile platform). You need some kind of bias measure: how polluted these users are, and how harmful that is. It's not Control vs Variant anymore; it's Test_1 Control vs Test_2 Variant_A vs Test_3 Variant_D, and possibly a branchy tree of small samples downstream. Teams don't realize they are working with complex multi-variable tests, and the winning Variant_B from Test_1 might not be "compatible" with the winning Variant_A from Test_2:

“Simpson’s Paradox:

A trend or result that is present when data is put into groups that reverses or disappears when the data is combined.” Read more about Simpson’s Paradox and Interpreting Data.

🚩To reiterate: within a single time frame, you won’t be able to confirm, for multiple tests, whether the lift they demonstrate (a) didn’t happen by chance and (b) holds up alongside all the other iterations being actively tested.
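The arithmetic behind point (a) is easy to show. With k independent tests each read at α = 0.05, the chance that at least one "winner" is pure noise is 1 - (1 - α)^k, about 40% for ten tests. One standard mitigation, not something prescribed here, is to read a batch of concurrent tests together with the Benjamini-Hochberg false discovery rate procedure, sketched below with hypothetical test names and p-values. It addresses (a) only and says nothing about (b).

```python
def family_wise_error(alpha: float, k: int) -> float:
    """Probability of at least one false positive across k independent tests at level alpha."""
    return 1 - (1 - alpha) ** k


def benjamini_hochberg(p_values: dict, q: float = 0.05) -> set:
    """Names of tests that remain significant under Benjamini-Hochberg FDR control at level q."""
    ranked = sorted(p_values.items(), key=lambda item: item[1])
    m = len(ranked)
    cutoff = 0
    # Step-up rule: find the largest rank whose p-value is under rank / m * q
    for rank, (_, p) in enumerate(ranked, start=1):
        if p <= rank / m * q:
            cutoff = rank
    return {name for name, _ in ranked[:cutoff]}


# Hypothetical p-values from five concurrent tests read in the same week
p_values = {
    "onboarding": 0.003,
    "paywall": 0.012,
    "premium_access": 0.025,
    "cta_copy": 0.045,
    "layout": 0.20,
}

print(f"chance of a false winner among 10 tests: {family_wise_error(0.05, 10):.0%}")
print("survive BH:", sorted(benjamini_hochberg(p_values)))
```

Here `cta_copy` (p = 0.045) would count as a win at a naive 0.05 cutoff but does not survive the correction. BH assumes the tests are independent or positively dependent, which overlapping experiments may not satisfy, so treat the result as a sanity check rather than a guarantee.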

I don’t intend to block or hinder experimentation. It’s exciting what the latest feature flagging and experimentation tools can do. But at the end of the day, it’s my team's responsibility to ensure trust, confidence, and correctness in the test inference. Here are some of the things I am trying out, or that have already proved to back me up.

✅ How to adapt your team to the thriving era of experimentation

Pre/Post analysis: always keep a check on high-level KPIs. Regardless of what test impact you see, the ecosystem metrics (usually reported monthly) don’t lie. Set up reminders to follow up in 30 days or 3 months, and keep it high level. If you can't do it for every test, do it for a series of product iterations: the onboarding flow, a new experience for a new feature, the initial activity, etc.
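A pre/post check can be as simple as comparing the mean of a daily KPI over equal windows before and after a launch. The sketch below assumes a hypothetical `daily_kpi` mapping from date to metric value; it is descriptive only and does not separate the launch from seasonality or other concurrent changes, which is why it should stay high level.

```python
from datetime import date, timedelta
from statistics import mean


def pre_post_change(daily_kpi: dict, launch: date, window_days: int = 30) -> float:
    """Relative change of the mean KPI in the window after `launch` vs. the window before it.

    Descriptive only: seasonality, other launches, and marketing pushes all leak in.
    """
    before_start = launch - timedelta(days=window_days)
    after_end = launch + timedelta(days=window_days)
    pre = [v for d, v in daily_kpi.items() if before_start <= d < launch]
    post = [v for d, v in daily_kpi.items() if launch <= d < after_end]
    if not pre or not post:
        raise ValueError("not enough data on one side of the launch date")
    return mean(post) / mean(pre) - 1


# Hypothetical daily KPI: flat at 100 before the launch, 105 after it
kpi = {date(2024, 1, 1) + timedelta(days=i): 100.0 if i < 30 else 105.0 for i in range(60)}
print(f"{pre_post_change(kpi, launch=date(2024, 1, 31)):+.1%}")
```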

Assign an owner for a product feature (with its baseline metrics): A few years ago, I’d take the total volume of active A/B tests and divide it equally between my analysts, assigning the more complex tests (like new pricing, product adoption, notification frequency) to senior analysts and the more straightforward ones (onboarding, upsell, UI change tests) to junior analysts. Today, however, I am leaning towards dedicating an analyst to a single product feature or experience (e.g. onboarding, activation, conversion, engagement, subscriptions) and letting them own their domain with whatever iteration the product team is testing, regardless of its complexity.

Automate statistical checks: test validations (variance, distribution, randomness) have to be automated today. Assign a dedicated DS person to oversee statistics across all tests, and let them own and automate it (via views or dashboards). For example, back in my time at VidIQ, we introduced a consolidated experimentation dashboard that returned a table with the z-score, p-value, variance, volume, and user distribution for every active test. It was such a time saver. Don't do manual checks for every test in your impact analysis; it’s not scalable.
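A minimal sketch of the per-test row such a dashboard might compute, assuming a binary conversion metric and a planned split per test. It runs a two-proportion z-test for the lift and a chi-square sample ratio mismatch (SRM) check for the user distribution, using only the Python standard library. The `ExperimentSnapshot` fields and the 0.001 SRM threshold are assumptions (0.001 is a commonly used cutoff), not the VidIQ implementation.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class ExperimentSnapshot:
    """Hypothetical per-test counts pulled from the assignment and conversion tables."""
    name: str
    control_users: int
    control_conversions: int
    variant_users: int
    variant_conversions: int
    planned_variant_share: float = 0.5


def check_row(t: ExperimentSnapshot, srm_alpha: float = 0.001) -> dict:
    p_c = t.control_conversions / t.control_users
    p_v = t.variant_conversions / t.variant_users
    total = t.control_users + t.variant_users

    # Two-proportion z-test using the pooled conversion rate under H0
    pooled = (t.control_conversions + t.variant_conversions) / total
    se = math.sqrt(pooled * (1 - pooled) * (1 / t.control_users + 1 / t.variant_users))
    z = (p_v - p_c) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Sample ratio mismatch: chi-square goodness of fit with 1 degree of freedom,
    # whose p-value equals the two-sided normal tail at sqrt(chi2)
    exp_v = total * t.planned_variant_share
    exp_c = total - exp_v
    chi2 = (t.variant_users - exp_v) ** 2 / exp_v + (t.control_users - exp_c) ** 2 / exp_c
    srm_p = 2 * (1 - NormalDist().cdf(math.sqrt(chi2)))

    return {
        "test": t.name,
        "users": total,
        "variant_share": round(t.variant_users / total, 3),
        "var_control": round(p_c * (1 - p_c), 5),  # Bernoulli variance per arm
        "var_variant": round(p_v * (1 - p_v), 5),
        "lift_abs": round(p_v - p_c, 4),
        "z": round(z, 2),
        "p_value": round(p_value, 4),
        "srm_flag": srm_p < srm_alpha,
    }


snapshots = [
    ExperimentSnapshot("paywall_v2", 10_000, 500, 10_000, 580),
    ExperimentSnapshot("onboarding_skip", 10_400, 830, 9_600, 790),
]
for row in map(check_row, snapshots):
    print(row)
```

Feeding every active test through `check_row` gives one table to scan daily. In the example, `onboarding_skip` has a 52/48 split where 50/50 was planned, and the SRM check flags it before anyone reads its lift.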

Streamline documentation: your team has to simplify the test readout, both how it is communicated and how it is documented. Readjust your expectations for the readout: for example, not a deck per test, but a slide per test. With this volume of iterations, detailed notebooks and deep-dive impact analyses per test are no longer realistic. We moved past that stage when modern SaaS enabled PMs to make production changes with one click, without coding or deploying.

I live in the past and still try to document every product initiative, big or small, to understand how metrics move and user behavior evolves. Going forward, I am leaning towards documenting only big changes that aim to disrupt user flow or behavior.

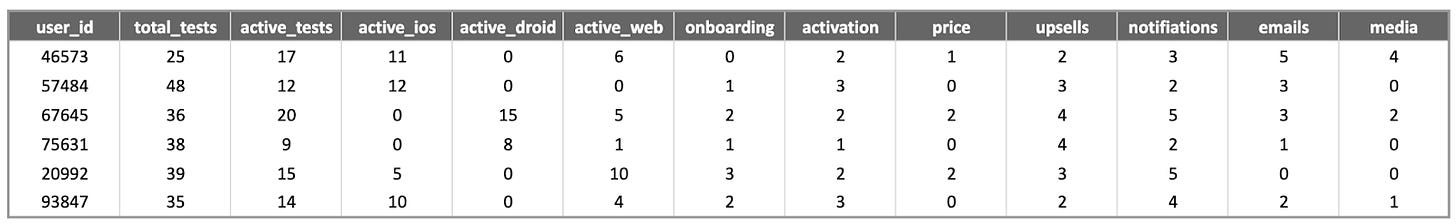

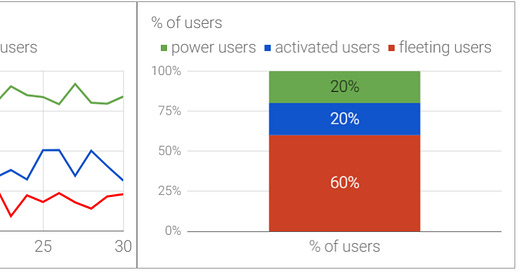

Eliminate the bias: At this very moment, I am brainstorming with my fellow analysts on a way to quantify the bias of users getting into multiple tests. For example, we could create a new user attribute holding the cumulative number of currently active tests per user across revenue, onboarding, activation, core usage, secondary features, etc. I’m envisioning something like this:

If we had this table, we would be able to cherry-pick users with the fewest conflicting test overlaps for the test read. Then, maybe, we could wrap it into a downstream view with something like a test_overlap_bias_score ranging from 1 to 10. This way, we could use users with scores of 1-2 for high-impact test analysis that feeds important decisions, and then compare them to users with scores of 5-7. Again, just brainstorming here. This is totally doable for companies with mature analytics.
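To make the brainstorm concrete, here is one hypothetical way to derive both the per-user count of active tests and a capped 1-10 overlap score from an assignment log. Everything in it is an assumption: the log format, the area labels, and the scoring rule (1, plus one point per other active test, or two points when the other test touches the same product area, capped at 10).

```python
from collections import defaultdict

# Hypothetical assignment log: (user_id, test_id, product_area)
assignments = [
    ("u1", "onboarding_v3", "onboarding"),
    ("u1", "paywall_price", "revenue"),
    ("u1", "premium_gate", "revenue"),
    ("u2", "onboarding_v3", "onboarding"),
    ("u3", "paywall_price", "revenue"),
    ("u3", "search_ui", "core_usage"),
]


def active_tests_per_user(rows):
    """Cumulative number of currently active tests per user, plus the areas they touch."""
    tests, areas = defaultdict(set), defaultdict(set)
    for user, test, area in rows:
        tests[user].add(test)
        areas[user].add(area)
    return {user: {"active_tests": len(tests[user]), "areas": sorted(areas[user])} for user in tests}


def overlap_bias_score(rows, focus_test, same_area_weight=2, cap=10):
    """Hypothetical 1-10 score of how 'polluted' each user in focus_test is by other tests."""
    area_of = {test: area for _, test, area in rows}
    tests = defaultdict(set)
    for user, test, _ in rows:
        tests[user].add(test)
    scores = {}
    for user, user_tests in tests.items():
        if focus_test not in user_tests:
            continue
        others = user_tests - {focus_test}
        raw = 1 + sum(same_area_weight if area_of[t] == area_of[focus_test] else 1 for t in others)
        scores[user] = min(raw, cap)
    return scores


print(active_tests_per_user(assignments))
print(overlap_bias_score(assignments, "paywall_price"))
```

Users in `paywall_price` then get a score of 4 (`u1`, who also sits in another revenue test and in onboarding) or 2 (`u3`), so a high-stakes read could start from the low-score users and be compared against the rest.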

Or we could try this solution shared by Eppo:

“Another method can be to run a regression every day on a user-level dataset predicting a core metric. In that regression, [include] a [covariate] for every active experiment and also every pair of experiments. If the pair-wise experiment coefficients show up as significant to some threshold, you'd see that there's an interaction effect issue.”
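A minimal sketch of that regression with two simulated experiments, using NumPy ordinary least squares: one dummy per experiment plus one product term per pair. The data, effect sizes, and variable names are invented for illustration; in practice the design matrix would be rebuilt daily from the user-level dataset, and the significance threshold (for example |t| > 3) is a judgment call.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 20_000
# Two hypothetical concurrent experiments with independent 50/50 assignment
exp_a = rng.integers(0, 2, n)
exp_b = rng.integers(0, 2, n)
# Simulated metric: each variant helps on its own, but the combination cancels part of the gain
metric = 10 + 0.5 * exp_a + 0.4 * exp_b - 0.6 * exp_a * exp_b + rng.normal(0, 2, n)


def ols_with_interactions(y, experiments):
    """OLS with one dummy per experiment plus one product term per pair; returns {term: (coef, t)}."""
    names = list(experiments)
    columns = {"intercept": np.ones_like(y, dtype=float)}
    for name in names:
        columns[name] = experiments[name].astype(float)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            columns[f"{a}:{b}"] = columns[a] * columns[b]
    X = np.column_stack(list(columns.values()))
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    sigma2 = resid @ resid / (X.shape[0] - X.shape[1])
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return {term: (c, c / s) for term, c, s in zip(columns, coef, se)}


results = ols_with_interactions(metric, {"exp_a": exp_a, "exp_b": exp_b})
for term, (coef, t) in results.items():
    print(f"{term:12s} coef={coef:+.3f} t={t:+.1f}")
```

Both main effects come out positive, but the `exp_a:exp_b` coefficient is strongly negative (the simulation builds in -0.6), which is the "winners that are not compatible" case. With many experiments the number of pair terms grows quadratically, so it may be worth restricting them to tests that actually share traffic.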

Best practices shared by experimentation experts:

“When using Superwall we'll automatically report back to you the inclusion of a user in an experiment. People usually then set that as an attribute on their user model to be able to break down by all the permutations of test variants.”
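Once each user carries their variant per experiment as an attribute, the breakdown by all permutations is a simple group-by. A minimal sketch with hypothetical experiment names and user records, where "-" marks a user not enrolled in that experiment. It also shows the cost: every extra concurrent test multiplies the number of cells, so each cell gets smaller.

```python
from collections import defaultdict

# Hypothetical user records: the variant each user got per experiment (None = not enrolled)
users = [
    {"onboarding": "A", "paywall": "A", "converted": False},
    {"onboarding": "A", "paywall": "B", "converted": True},
    {"onboarding": "B", "paywall": "A", "converted": True},
    {"onboarding": "B", "paywall": "B", "converted": False},
    {"onboarding": "A", "paywall": "B", "converted": True},
    {"onboarding": "B", "paywall": None, "converted": False},
]


def breakdown_by_permutation(users, experiments):
    """Users and conversion rate for every observed combination of variants."""
    cells = defaultdict(lambda: [0, 0])  # permutation -> [users, conversions]
    for user in users:
        key = tuple(user.get(exp) or "-" for exp in experiments)
        cells[key][0] += 1
        cells[key][1] += int(user["converted"])
    return {key: {"users": n, "conversion": conv / n} for key, (n, conv) in sorted(cells.items())}


for cell, stats in breakdown_by_permutation(users, ["onboarding", "paywall"]).items():
    print(cell, stats)
```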

“There are a few different ways that help you avoid data contamination and optimize traffic for a large number of simultaneous experiments:

- Run mutually exclusive experiments. Usually, if experiments are located in the same app area and aimed at affecting the same metric, it’s highly recommended not to expose them to the same set of users. Such an approach does require having an advanced splitter at your disposal. However, eventually, you get crystal-clear data. For example, do not test onboarding changes and a journey to the Aha! moment on the same users.

- Expose your user to the experiment only once they reach a specific point in their user flow. For example, if you’re experimenting with a new paywall design, do not add users to the experiment on the app launch but once the paywall is shown.

- Keep a global control group (usually no more than 5%). This approach requires extra effort to maintain and correctly compare with your treatments. However, if you find it workable, you can avoid adding control groups to each experiment in favor of manually extracting the same segment for the comparison from the global control group.
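The first and third techniques can be sketched with salted hashing, a common way to get deterministic, sticky assignment. In this hypothetical setup, a 5% global holdout sees no experiments, and experiments in the same layer own disjoint bucket ranges, so no user is ever in both `onboarding_v3` and `aha_moment_path`. The layer names, salts, and bucket ranges are assumptions for illustration, not any particular vendor's splitter.

```python
import hashlib

GLOBAL_HOLDOUT_PCT = 5  # assumed: buckets 0-4 of 100 never see any experiment

# Hypothetical layer config. Experiments in one layer own disjoint bucket ranges,
# so a user can land in at most one of them (mutually exclusive experiments).
LAYERS = {
    "onboarding_layer": {
        "onboarding_v3": range(0, 50),
        "aha_moment_path": range(50, 100),
    },
}


def bucket(user_id: str, salt: str, buckets: int = 100) -> int:
    """Deterministically map a user to [0, buckets) with a salted SHA-256 hash."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest[:8], 16) % buckets


def assign(user_id: str) -> dict:
    if bucket(user_id, "global_holdout") < GLOBAL_HOLDOUT_PCT:
        return {"global": "holdout"}
    assignments = {}
    for layer, experiments in LAYERS.items():
        layer_bucket = bucket(user_id, layer)
        for experiment, owned_buckets in experiments.items():
            if layer_bucket in owned_buckets:
                # A separate salt keeps the control/variant split independent of the layer split
                assignments[experiment] = "variant" if bucket(user_id, experiment, 2) else "control"
    return assignments


print(assign("user-42"))
```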

Remember that having relevant users in your groups is crucial. Do not assign to the test those who are unaffected by the new experience at all. For example:

- You’re validating the paywall-related monetization hypothesis, which means adding to the test users with active subscriptions is irrelevant.

- You’re testing new logic for guiding your users to the Aha! moment, so exposing the test to those who have already passed it only adds extra noise to your data.”
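Trigger-based exposure and the eligibility rule can live in the same hook. A minimal sketch, assuming a hypothetical `on_paywall_shown` callback and an injected `assign_variant` function (in practice, something like a hash-based splitter): subscribers are never enrolled, and a user is recorded as exposed only the first time the paywall is actually shown, keeping that variant afterwards.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class User:
    user_id: str
    has_active_subscription: bool
    exposures: Dict[str, str] = field(default_factory=dict)  # experiment -> variant


def on_paywall_shown(
    user: User, experiment: str, assign_variant: Callable[[str, str], str]
) -> Optional[str]:
    """Hypothetical hook called when the paywall is rendered, not at app launch."""
    if user.has_active_subscription:
        return None  # unaffected by a paywall change: keep them out of the test entirely
    if experiment not in user.exposures:
        # First real exposure: assign and log it once, so later shows keep the same variant
        user.exposures[experiment] = assign_variant(user.user_id, experiment)
    return user.exposures[experiment]


# Stand-in splitter for the example; a real one would be deterministic per user
always_b = lambda user_id, experiment: "B"
subscriber = User("u1", has_active_subscription=True)
free_user = User("u2", has_active_subscription=False)
print(on_paywall_shown(subscriber, "paywall_v2", always_b))
print(on_paywall_shown(free_user, "paywall_v2", always_b))
```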

“There is one area to keep in mind when running parallel experiments. That is the case where two changes directly interfere with one another, creating a different impact on behavior when combined than when in isolation. This happens when experiments are being run on the same page, the same user flow, etc. To avoid interaction effects, review concurrent experiments for interactions: use tags and naming conventions to track changed areas, manually test changes as part of the rollout, and look for other feature flags in nearby code. You should also design colliding tests to highlight interactions and compare each variant’s performance against one another.”

Remote configuration and rapid feature flagging open a new age of A/B testing. Nowadays, most experimentation toolkits are flexible and configurable. They empower any product leader to pretend they have enough design and DS resources and to unleash product iterations in no time. As exciting as that sounds, it means that, as a data scientist, you won’t have the luxury of calculating the timeline for reaching significance, running a post-rollout impact check, working on a communication plan for every test, or creating a case study deck for every product change. Brace for a tsunami of kinda-tests and not-quite-full-rollout launches. And then: learn to swim in it.

Thanks for reading, everyone. Until next Wednesday!
