How To Find Optimal Proxy Metrics - Issue 197

Using proxy metrics for measuring and quantifying the impact of a product rollout or a new feature.

Welcome to the Data Analysis Journal, a weekly newsletter about data science and analytics.

Teams often mistakenly evaluate A/B tests against North Star metrics or business KPIs, such as user retention, customer churn, revenue, or LTV. This approach is flawed for 2 reasons:

First, business metrics are not sensitive, meaning a lift created by an A/B test is unlikely to show up in KPIs (unless your MDE is over 50%).

Second, business metrics are designed to resist short-term changes from A/B tests and to reflect only long-term impacts.

Despite this, using business KPIs to measure the effectiveness of A/B tests remains a common practice. To address this issue, researchers from Google, Stanford, and the Department of Statistical Science at Duke University collaborated on a study to explain why you should use sensitive proxy metrics for A/B tests instead of the North Star or business KPIs.

They introduced the concept of Pareto Optimal Proxy Metrics, which significantly optimize the accuracy and sensitivity of lift predictions. Let’s dive into why business core KPIs aren’t suitable for A/B tests and how to select the appropriate proxy metric for measuring product rollouts or feature optimization.

Product is a subset of the business.

If you have been reading my newsletter, you're already familiar with how I classify all metrics into three layers in product analytics:

1. Success metrics

Success metrics are 3-4 data points that directly illustrate the change in user behavior caused by a product launch or a test. They can be the total or average number of user actions, the number of sales or transactions, click-through rate, monthly/annual subscription ratio, and so on.

2. Ecosystem metrics

Ecosystem metrics are typically top or core KPIs. These are high-level business metrics that often involve calculations, specific date window logic, and filters. This can be MAU, MRR, Churn rate, or the North Star.

3. Dark side / Tradeoff metrics

Tradeoff, tension, or counter metrics are meant to show a negative effect of a product change that you want to keep an eye on. For example, it can be % unsubscribes, refunds, reports of spam, or fraud. As I said earlier, if your product change increases usage in one feature, you are likely to see a decline in another. Users shift where they spend time. This metric helps you measure the balance and fully understand the impact.

Success metrics are not Ecosystem metrics.

Below, I will reference the Pareto optimal proxy metrics study conducted by data scientists Lee Richardson, Alessandro Zito, Jacopo Soriano, and Dylan Greaves.

“North star metrics are central to the operations of technology companies like Airbnb, Uber, and Google, amongst many others. Functionally, teams use north star metrics to align priorities, evaluate progress, and determine if features should be launched. Although north star metrics are valuable, there are issues using north star metrics in experimentation. To understand the issues better, it is important to know how experimentation works at large tech companies.”

Traditional business KPIs are not suitable metrics for assessing the impact of product initiatives. These KPIs primarily serve as ecosystem health indicators meant to describe the state of business and safeguard it against various seasonal, external, and micro effects.

However, when conducting an A/B test, relying on metrics like retention may not accurately reflect the true impact of an initiative. For example, users in the Variant group might return to your app 1% more than those in the Control group, but 62% of them could also be influenced by other hidden effects you can’t capture or measure. These could include phone settings, a new viral video on TikTok, or unrelated factors like the plum season or a time change.

Remember the case study from Strava on how they boosted user engagement through a redesign of the Route Detail page? For the A/B test, while they aimed to improve user retention in the app, they chose to use very sensitive, granular metrics such as average page views per user, saving routes, downloading routes, recording routes, and others, rather than relying on broader measures like week-over-week product usage or retention.

Retention is an ecosystem KPI. It is not sensitive to isolated fluctuation or noise. It isn’t designed to be sensitive because it’s an output metric (read Common Mistakes In Defining Metrics by Brian Balfour). However, clicks, page opens, and transactions are good for capturing immediate responses and variations, thus offering a more nuanced view of specific actions or behaviors.

Finding optimal proxy metrics

What is a proxy metric?

“The ideal proxy metric is short-term sensitive, and an accurate predictor of the long-term impact of the north star metric.”

Using business KPIs or North Star metrics for A/B testing raises 2 main concerns. These are also the 2 scenarios where a proxy metric helps teams overcome the limitations of the North Star metric:

1. Ecosystem core metrics are not sensitive.

Sensitivity refers to the metric’s ability to detect a statistically significant effect, and it is closely tied to statistical power. If the metric is not sensitive, the test results do not clearly indicate whether the change being tested is improving or degrading the KPI.

“For example, metrics related to Search quality will be more sensitive in Search experiments, and less sensitive in experiments from other product areas (notifications, home feed recommendations, etc.).”
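To make the sensitivity point concrete, here is a minimal sketch of a sample size calculation for a two-sided two-proportion z-test (normal approximation). The baseline rates and lifts are hypothetical numbers I picked for illustration, not figures from the study.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect a relative lift in a rate
    with a two-sided two-proportion z-test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical inputs: 40% monthly retention moving by 1% (relative)
# versus a 10% click-through rate moving by 10% (relative).
retention_n = sample_size_per_group(0.40, 0.01)
click_n = sample_size_per_group(0.10, 0.10)
print(f"retention, +1% relative lift: {retention_n:,} users per group")
print(f"click-through, +10% relative lift: {click_n:,} users per group")
```

Under these assumptions, the retention test needs far more users per group than the click-through test, which is what "not sensitive" means in practice.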

2. Business metrics are not directional to user behavior or experience.

“Through directionality, we want to capture the alignment between the increase (decrease) in the metric and long-term improvement (deterioration) of the user experience. While this is ideal, getting ground truth data for directionality can be complex.”

Researchers suggest several methods to measure directionality by comparing the short-term value of a metric against the long-term value of a KPI.

“The advantage of this approach is that we can compute the measure in every experiment. The disadvantage is that the estimate of the treatment effect of the north star metric is noisy, which makes it harder to separate the correlation in noise from the correlation in the treatment effects. This can be handled, however, by measuring correlation across repeated experiments.”

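Essentially, you need to run correlations between the effect on a proxy metric and the effect on a KPI across your experiments. A Pearson correlation measures their linear relationship, while a Spearman correlation measures whether they move in the same direction by rank.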
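Here is a small sketch of that correlation check in plain Python. The lift values are made up for illustration, and the helper names (`pearson`, `average_ranks`, `spearman`) are my own, not something from the study.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation: strength of the linear relationship."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical estimated lifts (%) from six past experiments.
proxy_lift = [2.1, -0.5, 3.4, 0.8, -1.2, 1.5]
kpi_lift = [0.3, -0.1, 0.4, 0.0, -0.3, 0.2]

print(f"Pearson:  {pearson(proxy_lift, kpi_lift):.3f}")
print(f"Spearman: {spearman(proxy_lift, kpi_lift):.3f}")
```

With only a handful of noisy experiments, either number can be misleading, which is why the researchers stress measuring correlation across many repeated experiments.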

However, there’s a catch: there is an inverse relationship between sensitivity and directionality.

Finding the right proxy metric involves balancing sensitivity and directionality: the more you increase sensitivity, the less likely the proxy metric will relate to business KPIs. This is a very frustrating aspect of product experimentation. Basically, the faster you can detect a significant lift in a success metric, the further it may be from reflecting true ecosystem KPI improvements, and vice versa.

Researchers have proposed a new method for identifying proxy metrics that optimizes the trade-off between sensitivity and directionality using a Pareto optimal framework (or Pareto efficiency), a concept from economics and statistics used for optimization problems with multiple competing objectives. They tested 3 algorithms on over 500 experiments conducted over a period of 6 months. This testing aimed to compare the proxy metric versus the North Star metric, using binary sensitivity and the proxy score to assess the quality of the proxy metric.
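If Pareto optimality is new to you, the sketch below shows the core idea of dominance on two scores. This is not the paper's algorithm, only an illustration: the metric names and the sensitivity and directionality scores are hypothetical.

```python
def pareto_front(candidates):
    """Return names of metrics not dominated on (sensitivity, directionality).

    A metric is dominated if another metric is at least as good on both
    scores and strictly better on at least one.
    """
    front = []
    for name, (sens, direc) in candidates.items():
        dominated = any(
            o_sens >= sens and o_dir >= direc and (o_sens > sens or o_dir > direc)
            for other, (o_sens, o_dir) in candidates.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical scores in [0, 1]: (sensitivity, directionality).
candidates = {
    "page_views": (0.80, 0.30),
    "routes_saved": (0.60, 0.55),
    "routes_recorded": (0.35, 0.70),
    "weekly_retention": (0.05, 0.90),
    "button_hovers": (0.55, 0.20),
}
front = pareto_front(candidates)
print(front)
```

In this made-up example, `button_hovers` drops out because `page_views` beats it on both scores. Every metric left on the front is a defensible choice, and picking among them is a judgment call about how much sensitivity you will trade for directionality.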

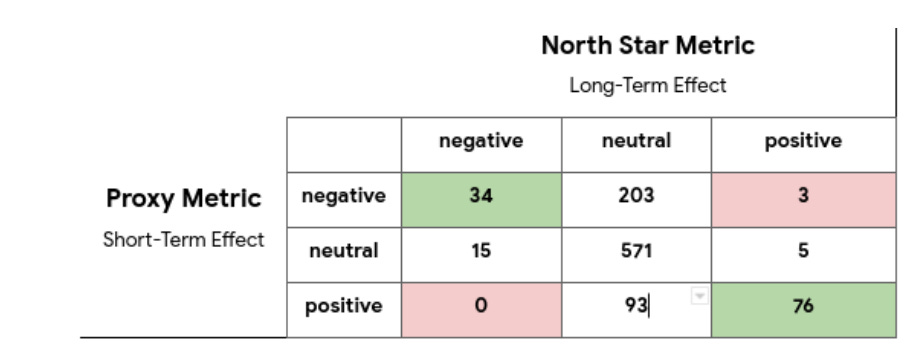

“The key idea is that the proxy score rewards both sensitivity, and accurate directionality. More sensitive metrics are more likely to be in the first and third rows, where they can accumulate reward. But metrics in the first and third rows can only accumulate reward if they are in the correct direction. Thus, the proxy score rewards both sensitivity and directionality. Microsoft independently developed a similar score, called Label Agreement.”
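A rough sketch of how these two measures could be computed from past experiments is below. The scoring rule is my reading of the quoted description, not a copy of the paper's formula: I assume +1 when the proxy and the North Star are both significant in the same direction, -1 when they are significant in opposite directions, and a normalization by the number of experiments where the North Star is significant. The experiment outcomes are invented.

```python
def binary_sensitivity(signs):
    """Share of experiments where the metric was statistically significant.

    Each entry is +1 (significant positive), -1 (significant negative),
    or 0 (neutral).
    """
    return sum(1 for s in signs if s != 0) / len(signs)


def proxy_score(proxy_signs, north_star_signs):
    """Assumed rule: (agreements - disagreements) divided by the number of
    experiments where the North Star metric is significant."""
    reward = 0
    north_star_significant = 0
    for proxy, north in zip(proxy_signs, north_star_signs):
        if north == 0:
            continue  # North Star neutral: nothing to agree or disagree with
        north_star_significant += 1
        if proxy == north:
            reward += 1  # significant, and in the correct direction
        elif proxy == -north:
            reward -= 1  # significant, but in the wrong direction
    if north_star_significant == 0:
        raise ValueError("no experiment has a significant North Star result")
    return reward / north_star_significant


# Hypothetical outcomes from eight past experiments.
proxy_signs = [1, 1, 0, -1, 1, 0, -1, 0]
north_star_signs = [1, 0, 0, -1, -1, 1, 0, 0]

print("proxy sensitivity:", binary_sensitivity(proxy_signs))
print("north star sensitivity:", binary_sensitivity(north_star_signs))
print("proxy score:", proxy_score(proxy_signs, north_star_signs))
```

The point to notice is that a proxy that is significant all the time but often points the wrong way loses as much reward as it gains, so sensitivity alone does not produce a high score.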

Read more about their study - Pareto Optimal Proxy Metrics.

I'm not aware of any tool allowing product owners to quickly evaluate the relationship between proxy metrics and business KPIs, guiding them toward the appropriate success metric. Ideally, you would have a fellow analyst on your side who can help translate each business KPI into suitable proxy metrics. These metrics can then be used to measure the effectiveness of product initiatives. For example:

Monthly retention → screen views or particular clicks.

MRR/ARR → successful transactions or completed payments.

Churn → cancellations, requests to cancel, or unsubscribes.

Subscription renewals → successful payments or transactions.

I am fascinated by this study. Not only is it timely, but it's also easy to understand and interpret. It addresses long-standing pain points we deal with in experimentation: why are improvements in the Variant not reflected in ecosystem core metrics? Why do these improvements only become visible in KPIs 4-5 months after the test is completed? And why does every method attempting to quantify the relationship between the success proxy metric and the business KPI seem destined to fail?

Thanks for reading, everyone. Until next Wednesday!
